The relationship between human psychology and algorithmic design represents one of the most profound shifts in how modern society processes information and makes decisions. Facebook’s algorithm, serving over 2.9 billion monthly active users, has evolved into a sophisticated psychological influence machine that shapes not just what we see, but fundamentally how we think, feel, and behave in digital and physical spaces.

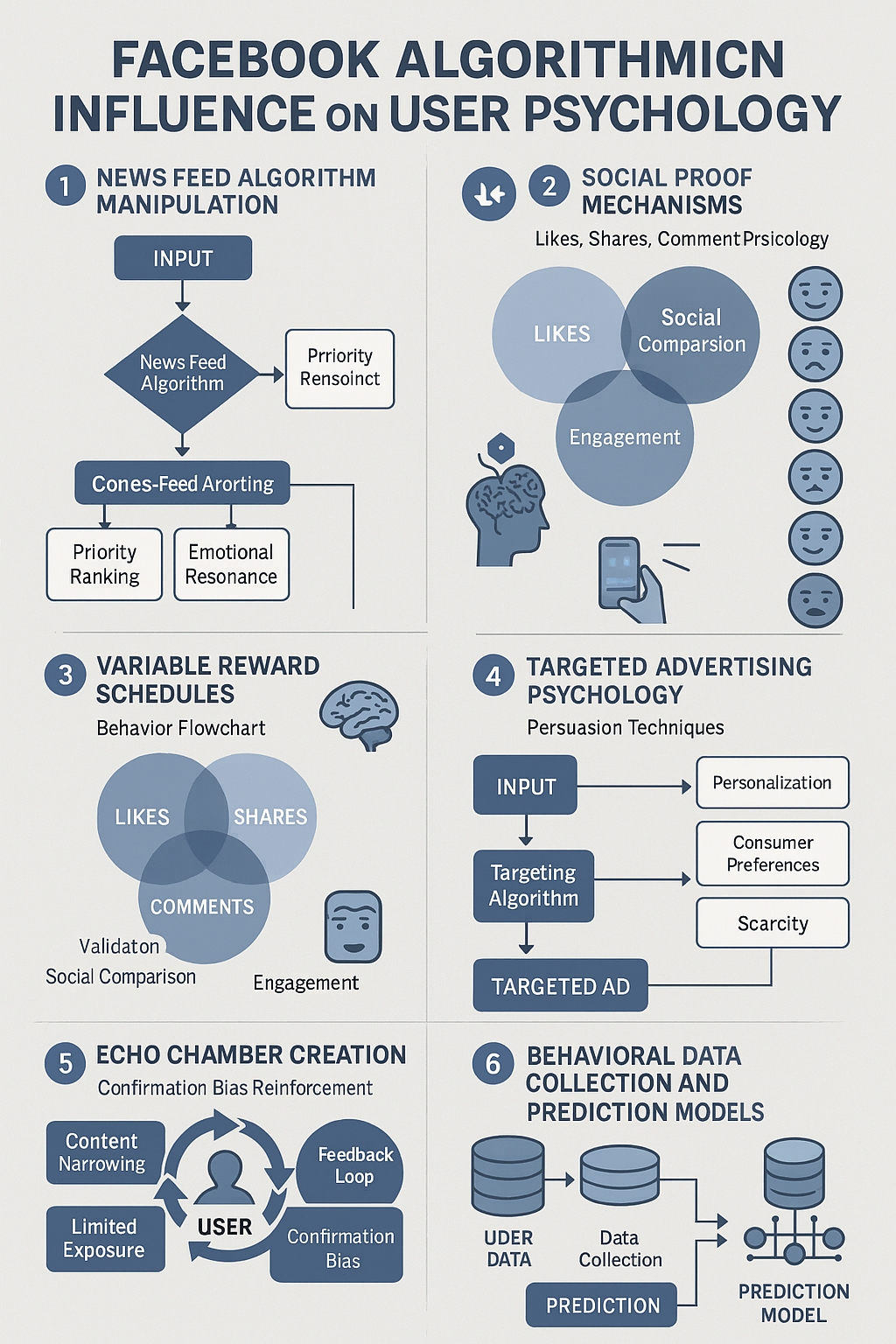

Understanding this psychological manipulation requires examining the interplay between human cognitive biases and algorithmic exploitation. Facebook’s system doesn’t present content at random; it draws on decades of behavioral psychology research to create engagement patterns that maximize user attention and, consequently, advertising revenue. It does so through psychological triggers that operate below the threshold of conscious awareness.

The neurochemical foundation of Facebook’s influence lies in its ability to trigger dopamine release through variable ratio reinforcement schedules, in which a reward arrives after an unpredictable number of actions. Unlike traditional media, where content delivery follows predictable patterns, Facebook’s feed introduces uncertainty into the reward system. Users never know when they’ll encounter something genuinely interesting, which creates an addictive pattern similar to gambling mechanisms. This unpredictability keeps the brain’s reward system constantly activated, making users return compulsively to check for new content.

The algorithmic design exploits what affective neuroscientist Jaak Panksepp called the “seeking system” in the brain, which drives exploration and information-gathering behaviors. When users scroll through their feed, they’re essentially pulling a slot machine lever, never knowing whether the next post will provide meaningful social connection, entertainment, or validation. This uncertainty creates a psychological state where the anticipation of reward becomes more powerful than the reward itself, leading to extended engagement sessions that far exceed users’ original intentions.
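The slot machine comparison can be made concrete with a small simulation. The sketch below is a toy model, not Facebook’s actual system: it assumes a hypothetical feed in which a “rewarding” post appears either after exactly five scrolls (a fixed ratio) or with a one-in-five chance on every scroll (a variable ratio). All function names are invented for illustration.

```python
import random
from statistics import mean, pstdev

def fixed_ratio(every_n):
    """Reward arrives after exactly `every_n` scrolls, every time."""
    return lambda rng: every_n

def variable_ratio(mean_n):
    """Each scroll pays off with probability 1/mean_n, so the wait varies."""
    p = 1.0 / mean_n
    def draw(rng):
        scrolls = 1
        while rng.random() >= p:
            scrolls += 1
        return scrolls
    return draw

def gaps_between_rewards(schedule, n_rewards, seed=0):
    """Number of scrolls the user needs before each successive reward."""
    rng = random.Random(seed)
    return [schedule(rng) for _ in range(n_rewards)]

# Both schedules pay off once per five scrolls on average (assumed value).
fixed = gaps_between_rewards(fixed_ratio(5), 1000)
variable = gaps_between_rewards(variable_ratio(5), 1000)

print("fixed:    mean gap", mean(fixed), "spread", pstdev(fixed))
print("variable: mean gap", round(mean(variable), 2),
      "spread", round(pstdev(variable), 2))
```

Both schedules deliver rewards at about the same average rate, but only the variable one has a spread in waiting times. That unpredictability, rather than the amount of reward, is what the slot machine analogy refers to.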

Social proof mechanisms form another cornerstone of Facebook’s psychological influence architecture. The platform deliberately amplifies signals of social validation through likes, comments, shares, and reactions, turning social approval into a visible and countable commodity. When users see content with high engagement, they experience what psychologists term “informational social influence”: they assume popular content must be valuable or correct. This effect becomes particularly pronounced when users encounter information that challenges their existing beliefs but appears to have widespread social support.

The algorithm’s understanding of social proof extends beyond simple engagement metrics. It analyzes the social relationships between users, giving higher priority to content from people within users’ social circles. This creates an echo chamber effect where opinions and behaviors spread through networks based on social proximity rather than factual accuracy or genuine relevance. The psychological impact is profound: users begin to perceive their social group’s opinions as representative of broader societal consensus, leading to polarization and confirmation bias reinforcement.

Facebook’s temporal manipulation strategies represent perhaps the most sophisticated aspect of its psychological influence system. The platform doesn’t just decide what content to show users; it carefully orchestrates when content appears to maximize emotional impact. Content that might generate strong emotional responses is often delivered during periods when users are psychologically vulnerable, such as late evening hours when decision-making capabilities are naturally diminished.

The algorithm tracks user interaction patterns to identify optimal psychological moments for content delivery. If a user typically engages more emotionally with political content after work hours, the system will preferentially serve such content during these windows. This temporal targeting creates artificial urgency around information consumption, making users feel they must immediately respond to or share content to maintain their social relevance.
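As a rough illustration of what “identifying optimal moments” could mean in practice, the sketch below computes an engagement rate per hour of day from an interaction log and picks the hour with the highest rate. The log format, the sample data, and the `best_hour` function are assumptions made for this example; nothing here describes Facebook’s real pipeline.

```python
from collections import defaultdict

def best_hour(interactions):
    """Return (hour with highest engagement rate, all rates by hour).

    `interactions` is an iterable of (hour_of_day, engaged) pairs, where
    `engaged` is True if the user reacted to the content they were shown.
    """
    shown = defaultdict(int)
    engaged = defaultdict(int)
    for hour, did_engage in interactions:
        shown[hour] += 1
        engaged[hour] += int(did_engage)
    rates = {hour: engaged[hour] / shown[hour] for hour in shown}
    return max(rates, key=rates.get), rates

# Hypothetical log for one user: (hour of day, did they engage?)
log = [
    (9, False), (9, False), (9, True),
    (13, False), (13, True),
    (19, True), (19, True), (19, False), (19, True),
]

hour, rates = best_hour(log)
print("highest engagement rate at hour", hour)
print(rates)
```

With this sample log the evening hour wins because three of its four impressions led to engagement. A real system would need far more data per user and some way to handle hours with very few impressions.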

Emotional contagion represents another powerful mechanism through which Facebook’s algorithm influences user behavior. Research in social psychology demonstrates that emotions spread through social networks, and Facebook’s system amplifies this natural phenomenon. The algorithm prioritizes content that generates strong emotional responses, regardless of whether those emotions are positive or negative. This creates feedback loops where emotionally charged content receives higher visibility, leading to increased emotional intensity across the platform.

The psychological impact of emotional contagion on Facebook extends beyond individual users to influence broader social movements and cultural trends. When the algorithm detects that certain emotional themes are generating high engagement, it amplifies similar content across the network. This can rapidly shift public discourse around specific topics, creating artificial consensus or controversy that may not reflect genuine public opinion.
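The feedback loop described here can be sketched with a toy ranking model. It assumes each post has a hypothetical “emotional intensity” score between 0 and 1, that viewers react to a post with probability equal to that score, and that the feed simply shows the posts with the most accumulated reactions. These are simplifying assumptions for illustration, not a description of Facebook’s ranking code.

```python
import random

def simulate_feed(n_posts=200, feed_size=10, rounds=200, seed=1):
    rng = random.Random(seed)
    # Hypothetical "emotional intensity" per post, reused as the chance
    # that a viewer reacts when the post is shown.
    intensity = [rng.random() for _ in range(n_posts)]
    engagement = [0] * n_posts
    impressions = [0] * n_posts

    def show(post):
        impressions[post] += 1
        if rng.random() < intensity[post]:
            engagement[post] += 1

    def top_of_feed():
        ranked = sorted(range(n_posts), key=lambda p: engagement[p], reverse=True)
        return ranked[:feed_size]

    # Cold start: every post gets the same small number of impressions.
    for post in range(n_posts):
        for _ in range(5):
            show(post)

    # Ranked rounds: only the currently most-engaged posts are shown.
    for _ in range(rounds):
        for post in top_of_feed():
            show(post)

    return intensity, impressions, top_of_feed()

intensity, impressions, top = simulate_feed()
avg_all = sum(intensity) / len(intensity)
avg_top = sum(intensity[p] for p in top) / len(top)
top_share = sum(impressions[p] for p in top) / sum(impressions)

print(f"mean intensity, all posts:   {avg_all:.2f}")
print(f"mean intensity, top of feed: {avg_top:.2f}")
print(f"share of impressions going to the top posts: {top_share:.0%}")
```

In this toy setup the ten posts that lead after the cold start are shown in every later round, so they collect about two thirds of all impressions even though they make up 5% of the pool. Because early reactions decide who leads, the posts that end up on top skew toward higher intensity than the pool as a whole.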

Facebook’s algorithm employs sophisticated attention hijacking techniques that exploit fundamental aspects of human visual and cognitive processing. The system analyzes which visual elements, headline structures, and content formats capture user attention most effectively, then promotes content that matches these psychological triggers. This creates an arms race among content creators who must increasingly employ sensationalistic or emotionally manipulative tactics to achieve visibility.

The attention economy dynamics created by Facebook’s algorithm fundamentally alter how information producers approach content creation. Publishers and marketers have learned that content optimized for algorithmic promotion often differs significantly from content that provides genuine value to users. This misalignment creates a systematic bias toward information that exploits psychological vulnerabilities rather than serving users’ actual interests or needs.

| Psychological Trigger | Mechanism | Impact on Decision Making |
| --- | --- | --- |
| Variable Ratio Reinforcement | Unpredictable content rewards | Creates addictive usage patterns, reduces critical thinking time |
| Social Proof | Engagement metrics and peer validation | Influences opinion formation and purchasing decisions |
| Loss Aversion | Fear of missing important updates | Drives compulsive checking behavior and FOMO |
| Authority Bias | Promoted content from verified sources | Affects credibility assessment and belief formation |
| Reciprocity Pressure | Social interaction expectations | Influences sharing and engagement behaviors |

The formation of filter bubbles through Facebook’s algorithm represents a complex psychological phenomenon that goes beyond simple content curation. The system creates personalized reality tunnels by analyzing user behavior patterns, preferences, and social connections to construct unique information environments for each individual. These environments become self-reinforcing as users’ interactions within their personalized feeds provide feedback that further narrows their exposure to diverse perspectives.
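A minimal sketch of this self-reinforcing narrowing follows. It assumes a hypothetical feed that splits its space across five topics, a user whose click rates differ only modestly between topics, and a personalization rule that boosts each topic’s share in proportion to its click rate. The update rule, the learning rate, and the click rates are all invented for illustration. Shannon entropy, measured in bits, serves as a simple gauge of how diverse the feed is.

```python
import math

def entropy_bits(shares):
    """Shannon entropy of the topic mix; higher means a more diverse feed."""
    return -sum(s * math.log2(s) for s in shares if s > 0)

def personalize(click_rates, rounds, learning_rate=0.5):
    """Repeatedly boost each topic's feed share in proportion to its click rate."""
    n = len(click_rates)
    shares = [1.0 / n] * n          # start with an evenly mixed feed
    history = [entropy_bits(shares)]
    for _ in range(rounds):
        boosted = [s * (1.0 + learning_rate * c)
                   for s, c in zip(shares, click_rates)]
        total = sum(boosted)
        shares = [b / total for b in boosted]
        history.append(entropy_bits(shares))
    return shares, history

# Hypothetical user: only mildly prefers the first topic over the others.
click_rates = [0.30, 0.25, 0.20, 0.15, 0.10]
shares, history = personalize(click_rates, rounds=100)

print("final topic shares:", [round(s, 3) for s in shares])
print("feed diversity (bits): start", round(history[0], 2),
      "end", round(history[-1], 2))
```

Even though the user’s preferences differ by only a few percentage points, the compounding update lets the most-clicked topic crowd out the rest, and the diversity measure falls at every step. Real recommendation systems are far more complex, but the same compounding logic is what makes the narrowing self-reinforcing.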

The psychological comfort provided by filter bubbles makes them particularly insidious. Users experience reduced cognitive dissonance when their beliefs are constantly reinforced, creating a false sense of certainty about complex issues. This artificial confidence affects decision-making processes in significant ways, from political voting patterns to consumer purchasing choices. Users become less capable of considering alternative viewpoints or acknowledging the limitations of their own knowledge.

Facebook’s algorithm exploits cognitive availability bias by ensuring that recent, emotionally salient information receives disproportionate weight in users’ mental models of reality. When making decisions, people naturally rely on information that comes easily to mind, and Facebook’s system ensures that certain types of information maintain high mental availability through repeated exposure and emotional amplification.

This manipulation of cognitive availability has profound implications for everything from risk assessment to social judgment. Users may overestimate the prevalence of rare but dramatic events while underestimating more common but less sensational risks. The algorithm’s emphasis on engagement-driving content creates systematic distortions in users’ understanding of probability, causation, and social norms.
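The distortion can be illustrated with simple arithmetic. The sketch below assumes two hypothetical categories of events, one rare but dramatic and one common but mundane, and a feed that shows each category in proportion to its real-world frequency multiplied by an assumed engagement weight. The numbers are made up to show the effect and are not measurements.

```python
def feed_shares(base_rates, engagement_weights):
    """Share of the feed per category when exposure = frequency x engagement."""
    weighted = {k: base_rates[k] * engagement_weights[k] for k in base_rates}
    total = sum(weighted.values())
    return {k: v / total for k, v in weighted.items()}

# Assumed real-world frequencies: dramatic events are 1% of what happens.
base_rates = {"rare_dramatic": 0.01, "common_mundane": 0.99}
# Assumed engagement weights: dramatic posts draw ten times the engagement.
engagement_weights = {"rare_dramatic": 10.0, "common_mundane": 1.0}

shares = feed_shares(base_rates, engagement_weights)
overrepresentation = shares["rare_dramatic"] / base_rates["rare_dramatic"]

print(f"real-world share of dramatic events: {base_rates['rare_dramatic']:.1%}")
print(f"feed share of dramatic events:       {shares['rare_dramatic']:.1%}")
print(f"overrepresentation factor:           {overrepresentation:.1f}x")
```

Under these assumptions, events that make up 1% of reality fill roughly 9% of the feed. A user who estimates how common something is from how often they see it would overestimate the dramatic category by about that factor.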

The concept of psychological reactance becomes particularly relevant when examining how Facebook’s algorithm influences user autonomy and decision-making freedom. When users perceive that their choices are being constrained or manipulated, they may experience reactance that leads to opposite behaviors. However, Facebook’s influence operates largely below conscious awareness, making it difficult for users to recognize when their autonomy is being compromised.

The platform’s recommendation systems create an illusion of choice while actually narrowing the range of options users consider. This subtle manipulation is more effective than overt persuasion because it doesn’t trigger psychological reactance. Users believe they’re making independent decisions while actually operating within carefully constructed choice architectures designed to serve Facebook’s business interests.

Social comparison theory provides crucial insight into how Facebook’s algorithm affects users’ self-perception and decision-making processes. The platform’s emphasis on curated, positive content from social connections creates artificial standards for social comparison that bear little resemblance to reality. Users constantly compare their internal experiences with others’ external presentations, leading to systematic underestimation of their own well-being and life satisfaction.

These comparison dynamics influence consumer behavior, career decisions, and relationship choices as users attempt to match the artificial standards they observe in their feeds. The algorithm’s role in amplifying positive content from social connections creates competitive pressure that drives users toward increasingly performative behaviors, both online and offline.

| Cognitive Bias | Facebook’s Exploitation Method | Behavioral Outcome |
| --- | --- | --- |
| Confirmation Bias | Personalized content feeds | Strengthened existing beliefs, reduced open-mindedness |
| Availability Heuristic | Repeated exposure to dramatic content | Distorted risk perception and probability assessment |
| Social Comparison | Curated positive content from peers | Increased materialism and status-seeking behavior |
| Anchoring Bias | First impression content placement | Influenced subsequent information processing |
| Sunk Cost Fallacy | Time investment tracking | Continued platform usage despite negative experiences |

The manipulation of social identity represents one of Facebook’s most sophisticated psychological influence techniques. The algorithm analyzes users’ identity markers, group affiliations, and value systems to deliver content that reinforces specific aspects of their self-concept. This targeted identity reinforcement creates emotional investment in maintaining consistency with the platform’s perception of who users are.

Identity-based targeting affects decision-making by making certain choices feel more authentic or consistent with users’ self-image. When Facebook’s algorithm consistently shows users content related to specific identity categories, it strengthens those identity commitments and makes related consumer, political, and social choices feel more natural and inevitable.

The psychological concept of commitment and consistency plays a crucial role in how Facebook’s algorithm shapes long-term user behavior. The platform tracks users’ past interactions and preferences to create implicit commitments to certain viewpoints, products, or social positions. Once users have engaged with content in specific ways, the algorithm uses this history to encourage consistent future behavior.

This commitment mechanism operates through what psychologists call the “escalation of commitment,” where users feel pressure to justify their previous choices by making additional similar choices. Facebook’s algorithm exploits this tendency by gradually introducing users to more extreme versions of content they’ve previously engaged with, leading to progressive radicalization or consumer behavior intensification.

Understanding the psychological impact of Facebook’s algorithm requires examining how it affects users’ relationship with uncertainty and ambiguity. The platform’s recommendation systems create an illusion of comprehensive knowledge by providing seemingly relevant information about any topic users express interest in. This artificial reduction of uncertainty affects users’ willingness to seek out additional sources of information or acknowledge the limitations of their knowledge.

The comfort provided by Facebook’s curated information environment reduces users’ tolerance for genuine uncertainty and complexity. This psychological shift affects decision-making processes by making users more confident in choices based on incomplete or biased information. The algorithm’s role in creating false certainty has implications for everything from medical decisions to financial investments.

Facebook’s algorithm also exploits temporal discounting biases that affect how users evaluate future consequences of their current decisions. The platform’s emphasis on immediate gratification through likes, comments, and shares creates psychological pressure to prioritize short-term social rewards over long-term personal interests. This bias affects not only online behavior but also offline decision-making patterns as users become accustomed to immediate feedback and validation.

The psychological conditioning created by Facebook’s reward systems influences users’ patience and persistence in other areas of life. When individuals become accustomed to immediate social feedback, they may struggle with activities that require delayed gratification or long-term commitment. This psychological shift has implications for educational achievement, career development, and relationship building.

| Decision-Making Domain | Algorithmic Influence | Psychological Mechanism |
| --- | --- | --- |
| Consumer Purchases | Targeted advertising based on behavioral data | Social proof and scarcity manipulation |
| Political Opinions | Echo chamber reinforcement | Confirmation bias and group polarization |
| Career Choices | Success story amplification | Social comparison and availability bias |
| Relationship Decisions | Selective relationship content display | Unrealistic expectation formation |
| Health Behaviors | Wellness trend promotion | Authority bias and social influence |

The intersection of Facebook’s algorithm with users’ moral reasoning processes represents a particularly complex aspect of psychological influence. The platform’s content curation affects how users encounter ethical dilemmas and moral information, potentially influencing the development of moral intuitions and reasoning patterns. When the algorithm consistently exposes users to certain moral frameworks while filtering out others, it shapes the foundation upon which ethical decisions are made.

Moral psychology research indicates that people’s ethical judgments are heavily influenced by the information they’ve been exposed to and the social contexts in which moral issues are framed. Facebook’s algorithm becomes an invisible moral educator, determining which ethical perspectives users encounter and how moral issues are presented. This influence extends beyond online interactions to affect real-world ethical decision-making in personal, professional, and civic contexts.

The platform’s impact on users’ sense of agency and personal responsibility represents another crucial psychological dimension. Facebook’s algorithm creates environments where users feel their preferences and interests are being authentically reflected, when in reality their choices are being shaped by sophisticated behavioral analysis and manipulation. This illusion of authenticity affects users’ sense of personal responsibility for their beliefs and decisions.

When users believe their preferences are entirely self-generated, they may be less likely to question their assumptions or consider alternative perspectives. This psychological dynamic reduces critical thinking and self-reflection, making users more susceptible to influence while simultaneously increasing their confidence in their own judgment. The result is a population that feels highly certain about beliefs and decisions that have been systematically influenced by algorithmic manipulation.

Facebook’s algorithm also affects users’ understanding of causation and correlation, influencing how they interpret relationships between events and phenomena. The platform’s content curation creates artificial patterns in information exposure that can lead users to perceive causal relationships where none exist. This psychological distortion affects decision-making by providing false confidence in understanding complex systems and relationships.

The temporal clustering of related content can create illusions of causation that influence users’ beliefs about everything from health interventions to economic policies. When Facebook’s algorithm shows users multiple pieces of content about related topics in close temporal proximity, it exploits cognitive biases that lead people to assume causal relationships between temporally associated events.

The ultimate psychological impact of Facebook’s algorithmic influence extends to users’ capacity for independent thought and authentic self-expression. The platform’s sophisticated behavioral analysis and content optimization create environments where users’ thoughts and preferences are constantly being shaped by external manipulation disguised as personal choice. This influence operates through the gradual modification of users’ information environments, social connections, and feedback mechanisms.

The long-term psychological consequences of algorithmic influence include reduced cognitive flexibility, increased susceptibility to manipulation, and diminished capacity for genuine self-reflection. Users may lose touch with their authentic preferences and interests as these become increasingly difficult to distinguish from algorithmically influenced desires. The result is a form of psychological dependence where users’ sense of self becomes intertwined with their platform experience.

Understanding the psychology of Facebook’s algorithmic influence reveals the need for greater awareness of how digital environments shape human cognition and behavior. The platform’s sophisticated exploitation of psychological vulnerabilities represents a fundamental challenge to individual autonomy and authentic decision-making. As these systems become more sophisticated and pervasive, the importance of recognizing and countering their psychological influence becomes increasingly critical for maintaining genuine human agency in an algorithmically mediated world.

The implications extend far beyond individual user experience to encompass broader questions about democracy, social cohesion, and human flourishing in digital societies. Facebook’s algorithm represents just one example of how technological systems can shape human psychology at scale, highlighting the urgent need for greater understanding and regulation of psychological manipulation in digital environments.
